From Telegrams to Datagrams

Is improving internet access still a priority towards reducing poverty?

Thirty-six years ago, computer scientists at CERN made a pivotal decision regarding worldwide internet access. They decided that the TCP/IP protocol they had adopted to connect computers within the physics labs in Geneva would also be used to connect to computers outside of CERN. Unlike competing standards at the time, such as the ISO’s OSI model, TCP/IP was a framework that was not decided by a committee or a board of shareholders. Instead, it was adopted initially by a small networking group within CERN because OSI was not ready, had too many layers (7 instead of 3), and was expensive. Although the TCP/IP protocol was specified between 1974 and 1978, it was first tested between University College London and Stanford University in 1975. The first two-network TCP/IP radio connection occurred in 1976 between the Packet Radio Van and SRI, along with a 3-way connection between SRI’s Packet Radio Van, UCLA, and London. Norway’s NORSAR/NDRE, the US, and the UK also completed a 3-link network in 1977. By 1982, the Department of Defense had declared TCP/IP the standard for all military computer networking. Soon afterwards, IBM, AT&T, and DEC adopted TCP/IP even though they had their own proprietary protocols. While other internet service providers, such as France’s Minitel, existed in 1980, the only international computer networks operated from a handful of universities.

The first inter-city connection over ARPANET, the predecessor to TCP/IP networking, occurred on October 29th, 1969 between Los Angeles (UCLA) and Palo Alto (Stanford’s SRI). The technical conception of the modern internet originated largely from J.C.R. Licklider’s 1962-1964 memos at Bolt, Beranek and Newman, a Cambridge, Massachusetts R&D company. Had the concept of an “Intergalactic Computer Network” been coined by anyone other than a competent ARPANET employee, it might have sounded like ideas scribbled under the unsuspecting influence of Sidney Gottlieb’s LSD administration. The first ARPANET letters transmitted were “lo” from “login,” before one of the computers crashed and the rest of the transmission could not be completed.

From “What hath God wrought” to “Lo [& Behold]”

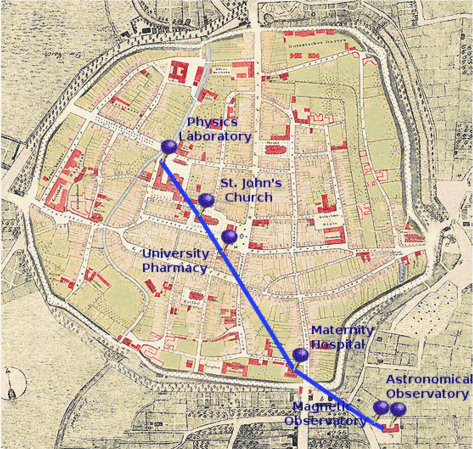

Samuel Morse’s famous 38-mile telegraph message from Washington, DC to Baltimore in 1844 was not the first electromagnetic telegraph message, but it is probably the best known. Among the first was a 3-mile needle telegraph by Baron Schilling that was successfully demonstrated in 1832, but he died in 1838 before it could be put into service. In 1833, Weber and Gauss developed a 1.2 km electrical telegraph to connect an astronomical observatory just outside the town of Göttingen with the Institute for Physics in the town centre.

Yet an even earlier telegraph that used electricity, but not magnetism, was an electrostatic telegraph consisting of 8 miles of iron wire developed by Francis Ronalds in 1816 in the basement garden of his mother’s home in Hammersmith, UK.

It was never commercialized. Ronalds offered it to the British Admiralty, which declared it “unnecessary,” though this was shortly after the Napoleonic Wars, when funds and enthusiasm were most likely lacking. According to Wikipedia,

“He found that the signal travelled immeasurably fast from one end to the other (but still believed the speed was finite). Foreshadowing both a future electrical age and mass communication, he wrote:

electricity, may actually be employed for a more practically useful purpose than the gratification of the philosopher's inquisitive research… it may be compelled to travel ... many hundred miles beneath our feet ... and ... be productive of ... much public and private benefit ... why ... add to the torments of absence those dilatory tormentors, pens, ink, paper, and posts? Let us have electrical conversazione offices, communicating with each other all over the kingdom.”

Several more early electrical telegraphs were independently developed by inventors in the early 1800s and even in the 1700s. In 1774, the Genevan Georges-Louis Le Sage developed a telegraph with 26 wires, one for each letter of the alphabet, connecting two rooms of his house. A 1753 anonymous letter to The Scots Magazine, said to be the oldest magazine still in existence, described a method of communicating through one wire for each character of the alphabet.

Indeed, 26 wires for sending a telegram certainly sounds like a fire hazard today. Subreddits dedicated to Ethernet switch cable wiring have become a place to share horror stories. Fortunately, the industry is developing newer, lower-voltage cabling that can replace everything from elevator communication wires to industrial control pipelines.

The goal of reducing the cost of communication lives on... in new forms

In 1876, the first audio transmitted over a telephone was “Mr. Watson, come here, I want to see you” between two rooms in Alexander Graham Bell’s lab. Nearly 20 years earlier, the first transatlantic telegraph message, from Ireland to Newfoundland, was sent by Queen Victoria to President Buchanan. Despite taking 16 hours to send 98 words, it was 15 times faster than steamship. By the 1860s, facsimile machines called pantelegraphs, which transmitted over telegraph lines, could send images and signatures. By 1881, the first international call was made from New Brunswick to Maine. By 1892, a nearly 1000-mile phone service between New York and Chicago had opened. By 1915, the first transcontinental phone call (3600 miles, from NY to SF) had been made using a vacuum tube amplifier. By 1926, the first transatlantic call using submarine cables from London to New York was completed, and commercial service began in 1927. By 1951, direct distance dialing was introduced, removing the need for telephone operators to route calls outside of a local phone company. By the 1960s, international direct dial was commercially available. Today, Google Voice international calls from the U.S. to Canada are free, and virtually all international video calls are free as well, billing just the minuscule data usage, if at all.
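The transatlantic telegraph figures above can be turned into a quick back-of-the-envelope calculation. The sketch below uses only the numbers quoted in the text (98 words, 16 hours, "15 times faster"). The function names are made up for illustration, and the steamship duration it prints is merely what those numbers imply, not an independently sourced figure.

```python
# Figures as quoted in the text; everything derived from them is a rough estimate.
WORDS_SENT = 98
TELEGRAPH_HOURS = 16
SPEEDUP_OVER_STEAMSHIP = 15  # "15 times faster than steamship"


def minutes_per_word(words: int, hours: float) -> float:
    """Average transmission time per word, in minutes."""
    return hours * 60 / words


def implied_steamship_days(hours: float, speedup: float) -> float:
    """Steamship crossing time implied by the quoted speedup, in days."""
    return hours * speedup / 24


if __name__ == "__main__":
    per_word = minutes_per_word(WORDS_SENT, TELEGRAPH_HOURS)
    crossing = implied_steamship_days(TELEGRAPH_HOURS, SPEEDUP_OVER_STEAMSHIP)
    print(f"Telegraph: about {per_word:.1f} minutes per word")
    print(f"Implied steamship crossing: about {crossing:.0f} days")
```

Under these assumptions, each word took close to ten minutes to send, and the same message would have spent roughly ten days at sea.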

Much has been said about the falling cost of telephone technology over the 20th century, and by the century’s end, the internet’s lower cost helped bring a new age of mass communication, more efficient and widespread than even television broadcasting. While the internet offered an opportunity for a “public square,” the drive towards algorithms influencing visibility has pushed many away from some of the most popular social networks and towards insular communities. According to a 2014 academic paper by Ilkka Tuomi, global communications only became effectively possible when “optical fibers reduced the cost of communication by three orders of magnitude.” Tuomi continues:

“As the sharing of knowledge and meaning is now increasingly independent of location, shared values are becoming key drivers for social connectivity. Social cohesion is increasingly based on similarity of values, and in the virtual world it is increasingly easy to avoid conflict by sticking with people who share the same values. We are therefore returning to a social organisation that resembles the pre-industrial world. But whereas in the pre-industrial world proximity and physical space generated practical constraints for cohesion and collaboration, on the Internet it is easy to excommunicate those who have a different view of the world. The Habermasian public sphere is thus splitting into incompatible public spaces and the political impact is already clearly visible.”

The paper goes on to compare this phenomenon to how Europe’s medieval organization of knowledge lost its dominance as trade with remote locations expanded and knowledge became increasingly universal:

“Natural sciences provided an exemplary case of context-independent knowledge, profoundly shaping beliefs about what knowledge is and how it can be acquired. Universal knowledge enabled mechanical replication both in sciences and in manufacturing, thus facilitating the rapid expansion of the industrial and science-based systems of production. This new mechanistic mode of production created the need for standardised skills and universal education.

For over a century, education was a high-speed line from the agrarian society to the modern urban world. Predictable life-paths and consumption patterns and rapidly advancing tools for mass production created a world of constant growth, measured as the quantity of production. In this world, progress was about removing scarcities and addressing basic needs, increasingly generated by the mass media. In this world, more was better, novelty was progress, and universal knowledge created the foundation that facilitated growth, jobs, and social cohesion.

Now, the universal model of knowledge is contested and the industrial world is in permanent decline. Blue-collar jobs have almost disappeared and many white collar jobs will follow suit as global real-time production networks require that humans are replaced by automated routines and computer algorithms wherever this is possible.

Although it is still commonly claimed that universality defines true knowledge, a more contextual view on knowing is gaining strength. Science itself is in crisis, as a rapidly growing fraction of research produces results that cannot be replicated. Indeed, it is now understood that the requirement of context-independent knowledge and reproducible experiments leads to a very specific model of nature. This model fits perfectly with those things that classical physics used as its prototypical cases; in general, however, it does not produce useful approximations of the world.”

Furthermore,

“In 1996, UNESCO defined the four pillars of learning as ‘learning to know’, ‘learning to do’, ‘learning to be’, and ‘learning to live together’. In a heterogeneous world of knowing, these four pillars of learning need to be integrated in a new way.

Learning to know requires a capability to understand how knowledge organises individual and social lives. Beyond the skills to access existing knowledge, we need an active capacity to create knowledge and make sense of the world. We could call this skill epistemic literacy. Epistemic literacy helps us to cope with heterogeneous and dynamic knowledge landscapes. It means that we understand how knowledge is created and what constitutes the social basis for learning and education. It means that we know what a good argument is, and what counts as evidence. It also means that we understand how and why different worldviews are created and how these lead to epistemic power struggles. Epistemic literacy is becoming socially and individually important as the Internet is rapidly eroding historically evolved social boundaries, institutions, and systems of meaning. As the world of knowing becomes transparent, the taken-for-granted contexts for knowing disappear and have to be reconstructed. Without epistemic literacy and capability for critical reflection, this construction is driven by dogmas, orthodoxies, and fashions that have sufficient power to generate internally coherent systems of meaning, and separate those who are in from those who are out. Without a parallel development of epistemic literacy, the democratic transparency of the Internet creates a global world of tribes and clans”

And lastly,

“Learning to do is no longer about acquisition and internalisation of practical knowledge; instead, it is about the ability to create and invent practically relevant knowledge. The world is expanding, and the static and well-defined industrial age skills are being replaced by more generic capabilities that make meaningful and valuable action possible.

Learning creates progress when it expands our capabilities to be and to do things that we have reason to value. As Sen pointed out, our capabilities are rooted in social, cultural, and bodily contexts that are not universal. Development is about the expansion of these personal and highly contextual capabilities. Although the debate still goes on about whether some basic universal human capabilities can be defined, in practice progress and development are deeply subjective and highly idiosyncratic. In the Senian capability-based approach, this subjective foundation of development is linked to a universal requirement that valuations are reasonable. They cannot be purely subjective preferences or based on hedonistic fulfillment; instead, we need to be able make a coherent argument about valuations that can also be accepted as coherent by those who do not share these values.”

The Internet is Flat

When TCP/IP was adopted by major industry players in the 1980s (IBM, AT&T, DEC, and later CERN), it flattened the non-universality of competing and proprietary computer networks into a largely universal internet backbone. This is what Thomas Friedman meant in his book The World Is Flat, when he referred to the convergence of the fiber optic internet and the personal computer along with the rise of multinational workflow software. An important insight to glean is that Tuomi is not wrong, but he does not address exactly what those valuations, reasonable or not, are. Nor can any single person identify all valuations, since varying valuations exist across the globe. In the West, the prevailing valuation has traditionally been the rules-based order. Martin Gurri’s 2014 book, The Revolt of the Public, addresses this worldwide phenomenon of internet-based societies with digitally enabled agency, but an earlier term from 1964 described a less idealistic take than Friedman’s on the emergent mass communication age: the “global village,” by Marshall McLuhan.

My first impression of the phrase was that in a completely “ideal world” (or perhaps a dystopian one, by other measures), if mass communication and universal knowledge (whether scientific or cultural) were “universally” realized, embraced, easily available, and accepted by a globally connected world, then the world would be “one big happy” village. McLuhan’s skepticism toward this reading comes through in an interview with Gerald Stearn, where he says it never occurred to him that a global village suggests uniformity and tranquility, and suggests that there is more discontinuity and division because the world is far more diverse as village conditions increase. It seems that around 2012, the transhumanists involved in AI research abandoned generalized poverty reduction in favor of a longtermist concern for future generations, taking a much more hypothetical, yet consequential, view of existential risks that have not yet occurred but are sometimes precipitated by the doomerist thinking and fantasies of billionaires. In my humble opinion, a world of increasing instability is far more likely to be avoided by developing mass-reproducible means of production that ensure the technologies needed for agriculture, housing, and medicine can be distributed, or, if not distributed, at least studied, rather than made scarce or outlawed to perpetuate an unsustainable economic zero-sum game.